-

Property & Casualty

Property & Casualty Overview

Property & Casualty

We offer a full range of reinsurance products and the expertise of our talented reinsurance team.

Expertise

Publication

Structured Settlements – What They Are and Why They Matter

Publication

PFAS Awareness and Concern Continues to Grow. Will the Litigation it Generates Do Likewise?

Publication

“Weather” or Not to Use a Forensic Meteorologist in the Claims Process – It’s Not as Expensive as You Think

Publication

Phthalates – Why Now and Should We Be Worried?

Publication

The Hidden Costs of Convenience – The Impact of Food Delivery Apps on Auto Accidents

Publication

That’s a Robotaxi in Your Rear-View Mirror – What Does This Mean for Insurers? -

Life & Health

Life & Health Overview

Life & Health

We offer a full range of reinsurance products and the expertise of our talented reinsurance team.

Publication

Key Takeaways From Our U.S. Claims Fraud Survey

Publication

Favorite Findings – Behavioral Economics and Insurance

Publication

Individual Life Accelerated Underwriting – Highlights of 2024 U.S. Survey

Publication

Can a Low-Price Strategy be Successful in Today’s Competitive Medicare Supplement Market? U.S. Industry Events

U.S. Industry Events

Publication

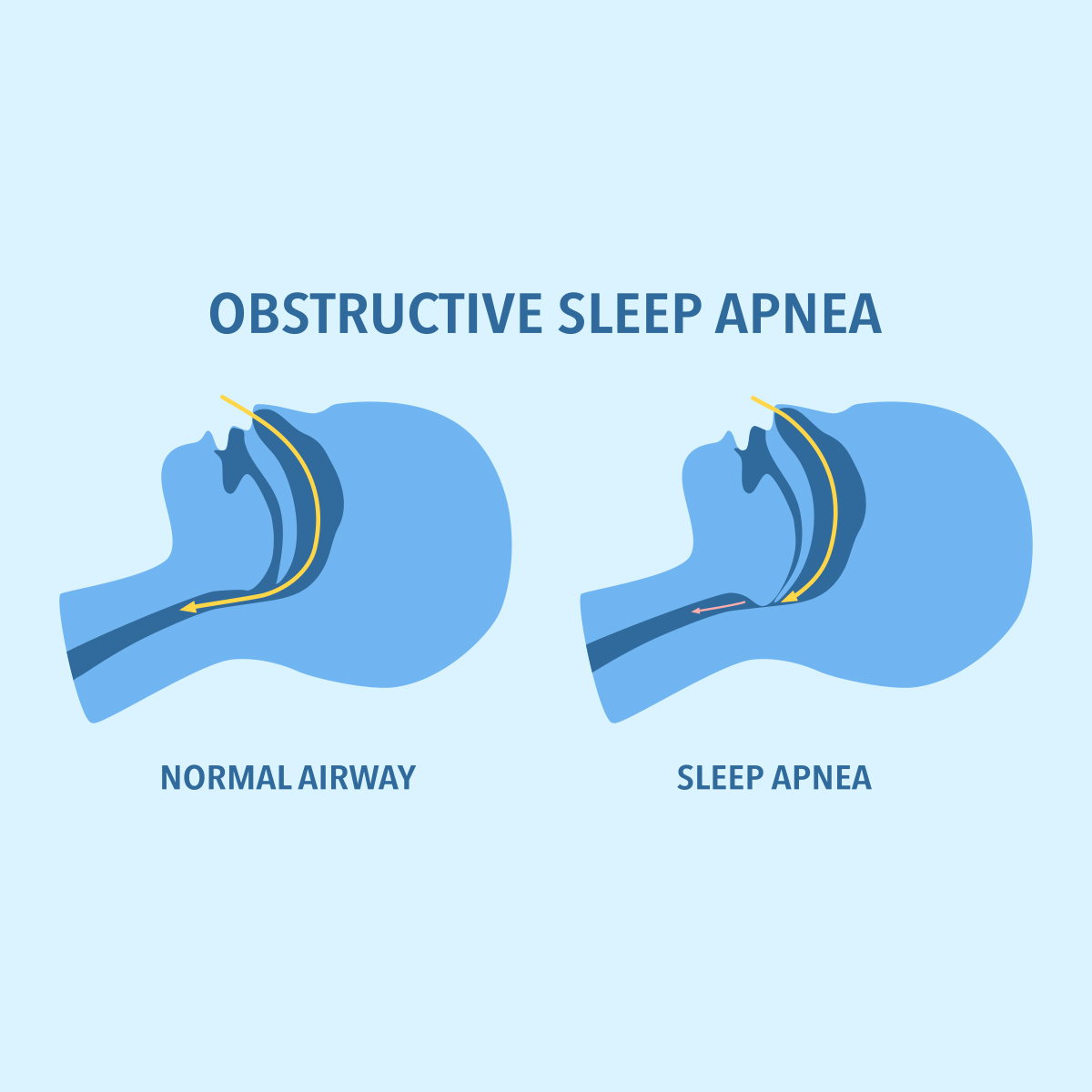

The Latest in Obstructive Sleep Apnea -

Knowledge Center

Knowledge Center Overview

Knowledge Center

Our global experts share their insights on insurance industry topics.

Trending Topics -

About Us

About Us OverviewCorporate Information

Meet Gen Re

Gen Re delivers reinsurance solutions to the Life & Health and Property & Casualty insurance industries.

- Careers Careers

Benefits of Generative Search: Unlocking Real-Time Knowledge Access

August 16, 2023

Frank Schmid


The introduction of OpenAI’s ChatGPT in November 2022 marked a significant technological achievement, offering the public a powerful AI tool free of charge. This AI chatbot quickly gained global popularity, becoming the go‑to solution for various tasks, including text summarization, language translation, code snippet generation, and creative writing. With its diverse creative output in formats like letters, emails, and blogs, ChatGPT proved to be a versatile and valuable tool for users.

In March 2023, Google’s Bard entered the scene as a formidable competitor to ChatGPT, offering an experimental free service to users.

How AI Chatbots Work

Both ChatGPT and Bard are powered by pre-trained Large Language Models (LLMs): GPT (Generative Pre-trained Transformer) for ChatGPT and PaLM (Pathways Language Model) for Bard. These LLMs are deep learning models trained extensively on datasets of publicly available information from the web, possibly supplemented by data from private sources and by synthetic, computer-generated data. Notably, LLMs are not limited to text: they can also be pre-trained on code in various programming languages, mathematical equations, images (with their captions), and audio recordings (with their transcripts) to emulate the multimodality of human cognition.

The underlying architecture of LLMs is the Transformer model, which incorporates a self-attention mechanism that enables it to capture long-range dependencies within text sequences. By breaking text into tokens (words and sub-words) and predicting each next token from the context of the preceding ones, LLMs learn language patterns, grammar, and semantic relationships, which enables them to produce coherent and contextually relevant responses to user input.
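To make the self-attention idea concrete, here is a minimal sketch of scaled dot-product attention with a causal mask, written in plain Python. It assumes each token has already been turned into a small vector and passes those vectors directly as queries, keys, and values; real Transformers apply learned projection matrices, use many attention heads, and stack many layers, all of which are omitted here. The function names and toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product self-attention with a causal (look-back-only) mask.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights): one output vector and one list of
    attention weights per token position.
    """
    d = len(queries[0])
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Token i is scored only against itself and the tokens before it.
        scores = [
            sum(qa * ka for qa, ka in zip(q, keys[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        # Output = attention-weighted average of the visible value vectors.
        out = [
            sum(w[j] * values[j][k] for j in range(i + 1))
            for k in range(len(values[0]))
        ]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Three toy tokens with made-up 2-dimensional embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = causal_self_attention(x, x, x)
print(attn[0])  # [1.0]: the first token can only attend to itself
```

The causal mask is what ties attention to next-token prediction: position i can draw on tokens 0 through i but never on tokens that come after it.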

LLM Limitations

Despite their impressive capabilities, LLMs do have certain limitations when it comes to knowledge access:

- Factual Accuracy – When generating text, an LLM is typically tasked with predicting the most probable next token. A highly probable sequence of tokens is not necessarily a factual one.

- Lack of Post-Training Learning – LLMs do not learn from experience after training; their knowledge is limited to the data available up to their training cutoff date.[1]

- Source Verification – LLMs do not provide the sources of generated content, which makes it challenging to verify the information they present.
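The factual-accuracy limitation can be illustrated with greedy decoding, where the single most probable token is chosen at each step. The probabilities below are invented for illustration and do not come from any real model; the point is only that the selection rule maximizes probability, not truth. Many deployed systems sample from the distribution (for example, with a temperature setting) rather than always taking the top token, which changes the mechanics but not the underlying issue.

```python
# Invented next-token probabilities for the prompt
# "The capital of Australia is" (illustration only, not real model output).
next_token_probs = {"Sydney": 0.46, "Canberra": 0.41, "Melbourne": 0.13}


def greedy_pick(probs):
    """Greedy decoding step: return the single most probable next token."""
    return max(probs, key=probs.get)


choice = greedy_pick(next_token_probs)
print(choice)                # Sydney
print(choice == "Canberra")  # False: most probable is not the same as correct
```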

To address some of these limitations and enable real-time knowledge access, LLMs can be combined with retriever models (RMs) in a unified architecture known as RALM (Retrieval-Augmented Language Model). This integration equips LLMs with search engine capabilities, so they can retrieve current information on demand. Prominent examples of such AI systems include Bing Chat, Bard, and Google’s SGE (Search Generative Experience), all of which use the Dense Passage Retriever (DPR) as their retriever model.[2]
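A retriever-augmented setup can be sketched as retrieve-then-generate: score stored passages against the query, keep the top k, and place them in the prompt ahead of the question so the LLM can draw on (and cite) them. The sketch below is hypothetical throughout. Its `embed` function is a toy word-count vector standing in for the trained BERT-based encoders that DPR uses, although ranking by inner product mirrors DPR’s scoring. The LLM call itself is omitted, and nothing here describes how Bing Chat, Bard, or SGE are actually implemented.

```python
import math
import re


def tokenize(text):
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text, vocab):
    """Toy stand-in for a trained encoder (hypothetical, not DPR's).

    Returns an L2-normalized word-count vector over a fixed vocabulary.
    """
    vec = [0.0] * len(vocab)
    for tok in tokenize(text):
        if tok in vocab:
            vec[vocab[tok]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def retrieve(query, passages, k=2):
    """Return the k passages with the highest inner product with the query."""
    words = sorted({tok for p in passages for tok in tokenize(p)})
    vocab = {tok: i for i, tok in enumerate(words)}
    q = embed(query, vocab)
    scored = [(sum(a * b for a, b in zip(q, embed(p, vocab))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query, passages, k=2):
    """Place numbered retrieved passages ahead of the question for the LLM."""
    hits = retrieve(query, passages, k)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(hits))
    return f"Answer using these sources and cite them:\n{context}\nQuestion: {query}"


docs = [
    "Bard was released by Google in March 2023.",
    "ChatGPT was released by OpenAI in November 2022.",
    "Hail can damage roofs and vehicles.",
]
print(build_prompt("When was ChatGPT released?", docs, k=1))
```

Numbering the retrieved passages in the prompt is one simple way to let the model point back to its sources, which speaks to the source-verification limitation above.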

Generative Search Engines

Generative search engines, like Bing Chat and Google’s SGE, leverage their LLMs’ generative abilities to interpret user queries, summarize search results, and engage users in conversation.[3] This interactive process allows the AI system to understand the query’s meaning and the user’s interests, though its working memory is limited to the current chat session.
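Session-scoped working memory can be pictured as a conversation history that belongs to one chat instance and is replayed to the model on each turn. The `ChatSession` class below is a hypothetical sketch, not any vendor’s API; its `max_turns` cap is an illustrative stand-in for a model’s finite context window, which in practice is measured in tokens rather than turns.

```python
class ChatSession:
    """Hypothetical chat instance whose memory belongs to this object only."""

    def __init__(self, max_turns=10):
        # Illustrative cap standing in for a finite context window.
        self.max_turns = max_turns
        self.history = []  # (role, text) pairs for this session only

    def add(self, role, text):
        """Record a turn, dropping the oldest turns beyond the cap."""
        self.history.append((role, text))
        self.history = self.history[-self.max_turns:]

    def context(self):
        """Return the text the model would be given on its next turn."""
        return "\n".join(f"{role}: {text}" for role, text in self.history)


first = ChatSession()
first.add("user", "I am a claims handler.")
first.add("user", "What drives auto claim costs?")
print(first.context())

second = ChatSession()  # a new chat starts with no memory of the first
print(repr(second.context()))  # ''
```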

Generative search engines offer numerous advantages but may struggle with complex instructions. Users can help by adopting a persona in their prompts, which supplies relevant context. For example, when asked about Oppenheimer, all three systems (Bing Chat, SGE, and Bard) return information on the person; when the user identifies as a movie critic, they return information about the film released in the summer of 2023.[4]
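Adopting a persona amounts to adding context to the prompt itself. The hypothetical helper below prefixes a self-description to a question using the same wording as the Oppenheimer example; it is a sketch of the idea, not a recommended prompt template.

```python
def persona_prompt(question, persona=None):
    """Optionally prefix a self-described persona to a question (hypothetical helper)."""
    if persona is None:
        return question
    return f"I am {persona}. {question}"


print(persona_prompt("Please tell me about Oppenheimer."))
print(persona_prompt("Please tell me about Oppenheimer.", "a movie critic"))
# I am a movie critic. Please tell me about Oppenheimer.
```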

Generative search has the potential to revolutionize knowledge access for many users, including business leaders, underwriters, and claims staff in insurance and reinsurance. By offering contextual understanding, detailed and informative answers, factual topic summaries, and content generation capabilities, generative search presents a novel approach to accessing, integrating, and leveraging public knowledge in decision-making processes.

Knowledge Mining and Decision Support

Contextual Understanding – Generative AI models excel at understanding the context of a query, enabling them to interpret complex questions and provide relevant answers – leading to more accurate and helpful responses than traditional keyword-based search engines provide.

Detailed and Informative Answers – Instead of offering short snippets or links to relevant pages, generative search can generate detailed and informative answers directly – giving users well-structured responses and potentially saving them time and effort in finding information.

Factual Topic Summaries – Generative AI can condense large volumes of information into concise summaries – making it easier for users to get a quick overview of a topic without diving into multiple sources.

Content Generation – One of the most exciting aspects of generative search is its ability to create new content – including automatically drafting risk assessments based on the latest publicly available information, position papers on potential courses of action, and training and educational material on evolving topics.

Generative search holds great promise for transforming how we access and use information, improving the efficiency and effectiveness of our decision‑making.

This blog was authored by Frank Schmid and reflects his thoughts; however, it was enhanced using Generative AI prior to Gen Re’s editorial and legal review.

Endnotes

1. See OpenAI, “GPT‑4,” https://openai.com/research/gpt-4, and Google AI, “PaLM API: Models,” https://developers.generativeai.google/models/language. The learning process of these LLMs from user interaction is restricted to a post-training alignment process, which aims to improve factual accuracy and ensure adherence to desired behavior. This alignment process is achieved through RLHF (Reinforcement Learning from Human Feedback), a type of reinforcement learning in which the AI system receives feedback from human trainers. RLHF is implemented when the LLM is made available on an experimental basis, as is the case with the free version of ChatGPT and with Google Bard.

2. Bing Chat and SGE are offered as experimental free services and utilize RLHF. However, Bing Chat Enterprise, a licensed service, does not employ RLHF. For details on the data privacy policy of Bing Chat Enterprise, see https://learn.microsoft.com/en-us/bing-chat-enterprise/privacy-and-protections.

3. Although Bard retrieves real-time information from the web, it does not provide sources unless it quotes at length from a webpage or returns an image. See Google Bard FAQ, “How and when does Bard cite sources in its responses?”, https://bard.google.com/faq#citation.

4. “I am a movie critic. Please tell me about Oppenheimer.”