Algorithmic Accountability and Proxy Discrimination in Life Insurance – the Regulatory Environment [Part 2 of 2]

November 30, 2022

Dr. Thomas Ashley, Brice Ballard

Region: North America


Life insurance carriers today face obligations regarding their use of external consumer data from two U.S. regulatory sources: state laws and the National Association of Insurance Commissioners (NAIC). We’ll discuss the implications for Life underwriting and risk-based pricing:

- Colorado adopted Senate Bill 21-169 (SB21-169), Protecting Consumers from Unfair Discrimination in Insurance Practices, in 2021. The legislation holds insurers accountable for testing their big data systems – including external consumer data and information sources, algorithms, and predictive models – to ensure they are not unfairly discriminating against consumers who fall within a protected class.1

- The NAIC has adopted definitions and interrogatories regarding accelerated underwriting in its Market Conduct Annual Statement (MCAS) Life & Annuities Data Call & Definitions.2

After looking at Disparate Impact Testing in our previous blog, where we provided background on the concepts of algorithmic accountability and proxy discrimination, this blog will describe the current, evolving regulatory environment and make some projections about the future.

Colorado

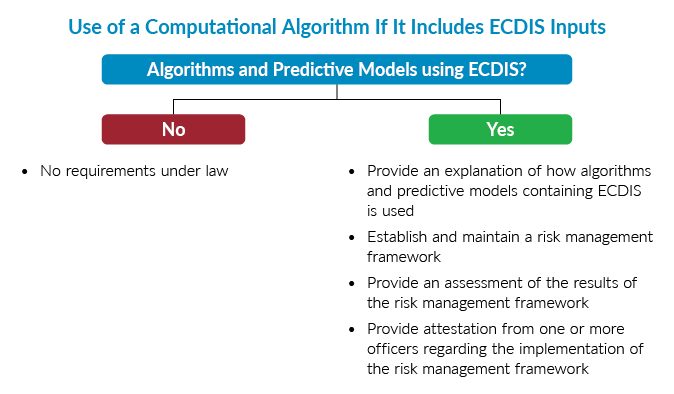

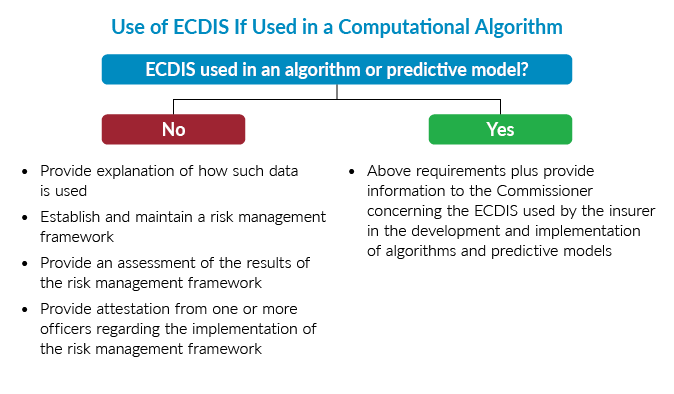

The Colorado statute ranges widely over insurance practices in all lines of business and all protected classes. The practices covered extend beyond underwriting to marketing and claims management. The statute responds to concern over the use of computational algorithms that utilize “external consumer data and information sources” (ECDIS).

The statute delegates implementation to the Commissioner of Insurance, who has held four stakeholder meetings. To assist that process, the American Council of Life Insurers (ACLI) drafted and presented a set of comprehensive proposed regulations.3

Colorado establishes two conditions for analysis of unfair discrimination and obligations for reporting and managing use of ECDIS. The main purpose of the analysis and reporting is to examine potential unfair discrimination.

Definitions

Every element of this framework requires specific definitions. Both the proposed regulation by the American Council of Life Insurers (ACLI) and the Colorado law provide them.

- “Algorithm” means a computational or machine learning process that informs human decision making in insurance practices.

- “Computational” means a set of rules used in a computerized process. This does not include an automation of an arithmetic calculation or a human decision-making process.

- “External Consumer Data and Information Source” means a data or an information source that is used by a Life Insurer to supplement traditional underwriting or to establish lifestyle indicators that are used in Underwriting. This term includes third-party credit scores, social media habits, purchasing habits, home ownership, educational attainment, and licensures. Except for the specific factors listed above in this definition, this term excludes: (a) traditional Life Insurer Underwriting factors, such as medical information, family history, occupational, disability, or behavioral information related to a specific individual; (b) the financial history of a specific individual that is correlated with mortality, morbidity and longevity risk, including but not limited to a specific individual’s personal history of bankruptcies, liens and outstanding debt; (c) income, assets or other elements of a specific person’s financial profile that a Life Insurer uses to determine insurable interest, suitability or eligibility for coverage; or (d) digitized or other electronic forms of the information listed in clauses (a), (b) and (c) above, including, for example electronic medical records, electronic motor vehicle reports.

Noteworthy in the description of ECDIS are the examples and the exclusions. The examples include non‑FCRA (Fair Credit Reporting Act) compliant evidence that is prohibited in underwriting but allowed in marketing. Facial and vocal recognition were often cited in meetings. The exclusions represent a “safe harbor” to protect continued use of evidence that was never questioned before the recent use of algorithms and models. The definitions highlight the difficulty of classifying each element of evidence and the need to maintain access to some ECDIS for purposes other than risk classification.

Discrimination is one of the hardest terms to define.

“Unfairly discriminate” and “unfair discrimination” include the use of one or more external consumer data and information sources, as well as algorithms or predictive models using external consumer data and information sources, that have a correlation to race, color, national or ethnic origin, religion, sex, sexual orientation, disability, gender identity, or gender expression, and that use results in a disproportionately negative outcome for such classification or classifications, which negative outcome exceeds the reasonable correlation to the underlying insurance practice, including losses and costs for underwriting.

This definition still omits crucial features. When does a negative outcome become disproportionate or exceed reasonable correlation?
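To make the open question concrete, here is a minimal sketch of one common way to quantify a "disproportionately negative outcome": comparing decline rates between groups as a ratio. This is an illustration only. Neither the Colorado statute nor the ACLI proposal prescribes this metric, and the function names, group labels, and toy records below are all hypothetical.

```python
from collections import Counter


def decline_rates(records):
    """Return {group: share of that group's decisions that were declines}."""
    totals, declines = Counter(), Counter()
    for group, decision in records:
        totals[group] += 1
        if decision == "decline":
            declines[group] += 1
    return {group: declines[group] / totals[group] for group in totals}


def rate_ratios(rates, reference_group):
    """Ratio of each group's decline rate to the reference group's rate."""
    reference_rate = rates[reference_group]
    return {group: rate / reference_rate for group, rate in rates.items()}


# Hypothetical toy data: (group label, underwriting decision).
records = (
    [("A", "offer")] * 90 + [("A", "decline")] * 10
    + [("B", "offer")] * 80 + [("B", "decline")] * 20
)

rates = decline_rates(records)
ratios = rate_ratios(rates, reference_group="A")
print(rates)
print(ratios)
```

A ratio like this only describes the size of a disparity. It does not say where "disproportionate" begins, nor whether the disparity "exceeds the reasonable correlation to the underlying insurance practice"; those thresholds are exactly what the definition leaves open.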

Although the language covers all protected classes, race dominates the present discussion. Some categories of discrimination could conflict with effective insurance practice. Can we continue to protect underwriting for disabilities in the sale of Individual Disability insurance? Does gender-specific pricing stand? And the big question about race and many other categories of discrimination is “How can a carrier analyze this demographic information that it does not possess?”

Colorado has exposed some measures on implementation of the statute. They include a survey and a data call. To match the analysis work to department resources, Colorado will sample carriers with in‑state business. They have identified 25 carriers with the highest number of in‑force Individual Life policies. Both the ranking and the analysis will examine Individual Life only, excluding (for now at least) Individual Disability Income and Long Term Care. From the top 25, Colorado has randomly selected five carriers for data submission. Ten others will receive the survey.

The Colorado Division of Insurance’s survey draft probes company policy on unfair discrimination.4 Consider their question 4:

4. Which of your company's external data sources, predictive models, or insurance practices (for example, underwriting as a whole) are currently tested for Unfair Discrimination? Please provide a comprehensive list. For each item in the list, how often is it tested?

Presumably many carriers will eventually get a turn at the survey and data submission. The implication is that all carriers are now obligated to assess unfair discrimination in ECDIS and algorithms.

The data call will support testing for unfair discrimination by race. Initially, Colorado proposed a data structure that included name, address, and underwriting decision (offer or decline); Colorado would then impute race and conduct the testing itself. Concern over the transfer of Personally Identifiable Information led to a change: carriers themselves will conduct the testing and report the results. That brings us to the central question of ascertainment of race.

Colorado has emphasized an algorithm adapted from RAND Corporation called “Bayesian Improved First Name Surname Geocoding” (BIFSG).5 This model proposes racial group assignment from name and address.
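As a rough illustration of how a name-and-geography model of this kind works, the sketch below combines three inputs with Bayes' rule: the probability of each group given the surname, the probability of the first name given the group, and the probability of the geographic area given the group. It assumes the three inputs are conditionally independent, which is how the method is commonly described. The function name, group labels, and all probability values are hypothetical; a real implementation would draw these tables from reference data and is not shown here.

```python
def bifsg_posterior(p_group_given_surname, p_firstname_given_group, p_geo_given_group):
    """Combine the three inputs into a posterior probability for each group.

    posterior(g) is proportional to
        P(g | surname) * P(first name | g) * P(geography | g)
    and is then normalized so the probabilities sum to 1.
    """
    unnormalized = {
        group: p_group_given_surname[group]
        * p_firstname_given_group[group]
        * p_geo_given_group[group]
        for group in p_group_given_surname
    }
    total = sum(unnormalized.values())
    return {group: value / total for group, value in unnormalized.items()}


# Hypothetical probabilities for one applicant (not real reference data).
p_group_given_surname = {"group_1": 0.6, "group_2": 0.3, "group_3": 0.1}
p_firstname_given_group = {"group_1": 0.002, "group_2": 0.001, "group_3": 0.004}
p_geo_given_group = {"group_1": 0.01, "group_2": 0.03, "group_3": 0.02}

posterior = bifsg_posterior(
    p_group_given_surname, p_firstname_given_group, p_geo_given_group
)
most_likely_group = max(posterior, key=posterior.get)
print(posterior, most_likely_group)
```

The output is a probability for each group, not a certain label. Forcing a single assignment (as `most_likely_group` does) discards that uncertainty, which is one source of the misclassification discussed later in this article.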

Carriers may not want any link to race in their customer records. Complying with Colorado’s mandate to perform and report testing for unfair racial discrimination will therefore necessitate the use of an outside vendor to impute race.

The industry has expressed concern over distribution of the results of analysis for unfair racial discrimination.

While the mechanism of data production has taken form, interpretation has not. To make a determination of unfair discrimination requires a yardstick for racial group distribution. The yardstick will vary with the population analyzed. Consider that Colorado does not resemble the entire U.S. Any method of ascertainment of race results in ambiguity and inaccuracy. Consider people who identify with multiple or uncommon races. BIFSG will misclassify some portion of people. Interpretation needs a threshold for deviation from the yardstick.
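The following sketch shows what a comparison against a yardstick might look like in practice. It averages per-person group probabilities (rather than hard labels) into expected group shares, then flags groups whose share deviates from a benchmark by more than a tolerance. Everything here is an assumption made for illustration: the function names, the benchmark shares, and especially the tolerance, which no regulator has specified.

```python
def expected_group_shares(posteriors):
    """Average per-person probability vectors into expected group shares."""
    groups = posteriors[0].keys()
    count = len(posteriors)
    return {group: sum(p[group] for p in posteriors) / count for group in groups}


def deviations_from_yardstick(observed, yardstick, tolerance):
    """Return {group: (difference, flagged)}; flagged means |difference| > tolerance."""
    result = {}
    for group, benchmark_share in yardstick.items():
        difference = observed.get(group, 0.0) - benchmark_share
        result[group] = (difference, abs(difference) > tolerance)
    return result


# Hypothetical imputed probabilities for four applicants who received offers.
offer_posteriors = [
    {"group_1": 0.9, "group_2": 0.1},
    {"group_1": 0.8, "group_2": 0.2},
    {"group_1": 0.7, "group_2": 0.3},
    {"group_1": 0.6, "group_2": 0.4},
]

# Hypothetical yardstick (e.g., shares in the applicant pool) and tolerance.
yardstick = {"group_1": 0.6, "group_2": 0.4}
tolerance = 0.10

observed = expected_group_shares(offer_posteriors)
report = deviations_from_yardstick(observed, yardstick, tolerance)
print(observed)
print(report)
```

Changing the yardstick (state population, applicant pool, national population) or the tolerance changes which groups are flagged, which is why both choices need to be settled before results can be interpreted.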

The ACLI model regulation established a principles-based framework that can align with actuarial professional responsibility to guide such elements of interpretation. In our opinion, that is preferable to enactment of universal rules.

NAIC

The other established process for evaluation of proxy discrimination is in the NAIC Market Conduct Annual Statement (MCAS). It is much less developed and detailed than Colorado’s statute.

Beginning with the 2024 MCAS filing for 2023 business, interrogatories will include categorization of business as accelerated underwriting, as the NAIC has defined it, or not. Despite the terminology, the crux of differentiation of accelerated underwriting in MCAS is the use of algorithms, models and consumer data rather than speed of decision. In the working discussions to draft the definitions and interrogatories, the rationale expressed for attention to the process was to investigate unfair discrimination. Although these interrogatories contain no assessment of protected class, the implication is that such assessment will follow.

Conclusion

In multiple jurisdictions, legislators and regulators have turned their attention to discrimination against protected classes of consumers that may occur when insurers use data science and consumer data. Colorado and the NAIC MCAS have established concrete requirements for insurers to follow.

Gen Re believes that all insurers have an obligation to consider potential unfair discrimination that could be embedded in various practices.

Endnotes

1. Concerning Protecting Consumers From Unfair Discrimination in Insurance Practices. 2021 Regular Session, 2021a_169_signed.pdf (colorado.gov). For a general synopsis of the legislation please see: https://leg.colorado.gov/bills/sb21-169; https://doi.colorado.gov/for-consumers/sb21-169-protecting-consumers-from-unfair-discrimination-in-insurance-practices. A “protected class” is a group of people who are protected against discrimination as a result of being a member of that class. The protected classes under the CO statute are “race, color, national or ethnic origin, religion, sex, sexual orientation, disability, gender identity, or gender expression”.

2. NAIC, https://content.naic.org/sites/default/files/call_materials/D%20Cmte%20Materials%207.11.15.pdf, see page 40 (Attachment Four) for April 30, 2024 Filing Deadline, describing 2023 new business.

3. Colorado Stakeholder Meeting on Revised Statute (C.R.S.); section 10‑3‑1104.9, Executive Summary and Outline of ACLI’s Proposed Regulatory Framework; Effective date of regulation is January 1, 2023.

4. https://doi.colorado.gov/for-consumers/sb21-169-protecting-consumers-from-unfair-discrimination-in-insurance-practices, July 8, 2022 Meeting Materials: DRAFT Life Insurance Survey – Governance and Testing Processes for Unfair Discrimination – DRAFT FOR DISCUSSION, https://drive.google.com/file/d/1eSGsOpEFhSBkBlM-BZCQUbWFHNbdiaOg/view

5. Voicu, Ioan, Using First Name Information to Improve Race and Ethnicity Classification (February 22, 2016). Available at SSRN: https://ssrn.com/abstract=2763826; https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2763826

Endnotes last accessed 15.11.2022.