Insurance Claims Anomaly Detection

April 23, 2024

Aloysius Lim

Introduction

Paying only for legitimate claims is important for insurance companies. Traditionally, insurance companies have relied on claims assessors to identify claims anomalies and ascertain the legitimacy of claims. This process can be complicated and often involves laborious manual procedures. The industry, however, continues to evolve, with ongoing technological advancements and improvements in the way we extract insights from data. Using machine learning techniques, we can identify patterns that may be missed by claims assessors and improve the efficiency of claims processing. This article describes the types of claims data typically available and the corresponding machine learning techniques for claims anomaly detection.

Data Considerations

The number of features in insurance claims data is generally more limited than in anomaly detection problems in other contexts. In autonomous driving, for example, the data used for anomaly detection are images. A 256×256 image has 65,536 pixels, which dwarfs the number of features we typically have in insurance data. Consequently, claims anomaly detection is generally more challenging.

One obvious solution is to collect more data. For health insurance claims, these data could be the hospital code, a doctor’s code, a drug prescription code, the cost of prescriptions, etc., data which are potentially predictive of an anomalous claim. However, this data strategy does not solve the immediate problem of having limited features for anomaly detection. We can make the best use of existing data by adopting the following strategies.

Data feature engineering

We can create more data features using existing claims data. For example, we can use our existing claims history database to derive the number of historical claims for a particular claimant at the point of making a new claim. It can be unusual for a claimant to claim repeatedly over a short period. Creating such potentially predictive data features makes it possible for the model to flag such a claim.
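As a minimal sketch of this kind of derived feature, the snippet below counts, for each claim, how many earlier claims the same claimant had already made. The claimant identifiers, dates, and the function name `add_prior_claim_counts` are hypothetical and serve only as an illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical claims history: (claimant_id, claim_date), in no particular order.
claims = [
    ("C001", date(2023, 1, 10)),
    ("C002", date(2023, 2, 5)),
    ("C001", date(2023, 3, 15)),
    ("C001", date(2023, 6, 1)),
    ("C002", date(2023, 9, 20)),
]


def add_prior_claim_counts(claims):
    """Return (claimant_id, claim_date, prior_claims) rows in date order.

    prior_claims counts the same claimant's claims that come before the
    current one in date order, so the feature uses no future information.
    """
    seen = defaultdict(int)
    rows = []
    for claimant_id, claim_date in sorted(claims, key=lambda c: c[1]):
        rows.append((claimant_id, claim_date, seen[claimant_id]))
        seen[claimant_id] += 1
    return rows


features = add_prior_claim_counts(claims)
for claimant_id, claim_date, prior_claims in features:
    print(claimant_id, claim_date.isoformat(), prior_claims)
```

Counting only earlier claims matters: a feature that includes later claims would not be available at the time the new claim is assessed.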

Tapping into Large Language Models (LLMs)

We can derive meaningful representations of our textual data features by tapping into Large Language Models (LLMs), and from these derive potentially predictive data features. For example, insurance companies typically capture claims causes as free-text descriptions. We can capitalize on the rich clinical information captured in this text by leveraging LLMs, which have already been trained on vast amounts of data including medical- and insurance-related content.

An embedding is a numerical representation of a word or text that captures its meaning and context in the form of a numeric vector. Drawing embeddings from an appropriate LLM, one that better captures the contextual information of our text, will provide a better representation of the text data. For example, an LLM trained on medical literature produces embeddings that appropriately capture the semantic meaning of claims cause descriptions in the medical context.

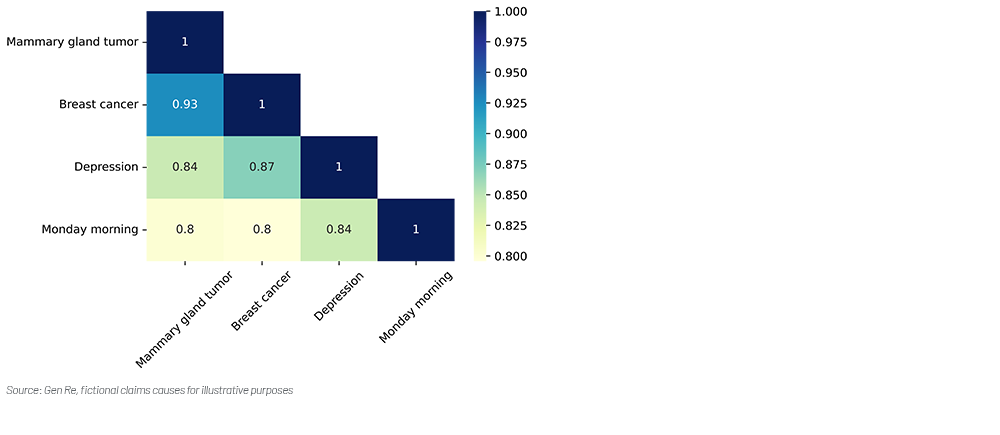

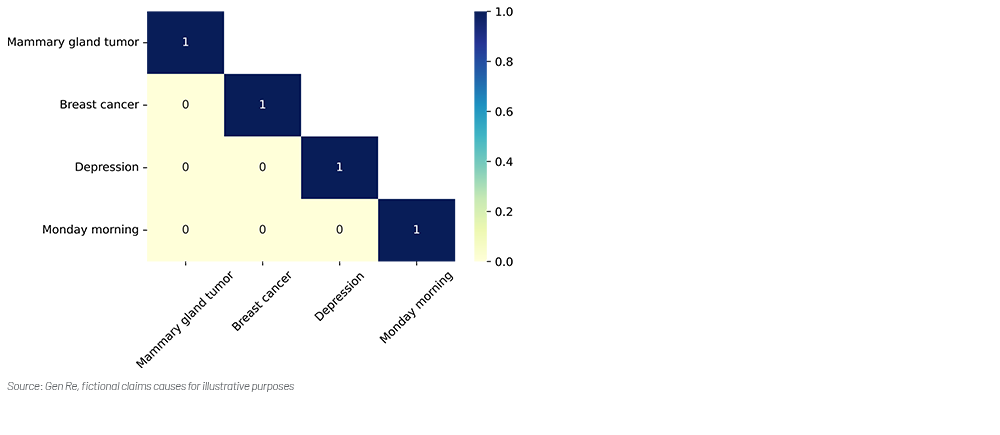

To illustrate capturing semantic meaning, we drew the embeddings from an LLM to derive the representation of the following texts in the form of a numeric vector for each claim cause.

- Mammary gland tumor

- Breast cancer

- Depression

- Monday morning

We would expect a good representation to identify that mammary gland tumor and breast cancer have similar semantic meaning in the medical context. Comparisons of the representations of the other texts are provided for reference.

To measure the similarity of the textual representations in the form of numeric vectors, we use cosine similarity as the metric. A value of 1 indicates perfect similarity, while a value of 0 indicates no similarity.

Example: Mammary gland tumor and breast cancer

LLMs: Captured the contextual similarity, as evidenced by the high cosine similarity.

Figure 1 – Cosine similarities of text representation from Large Language Model (LLM)

We then contrast the LLM representation with one-hot encoding. One-hot encoding has been chosen as a comparison because, unlike an LLM, it does not capture contextual information. One-hot encoding simply represents each word as a binary vector of 1s and 0s, with the vector size being the total number of words.

Example: Mammary gland tumor and breast cancer

One-hot encoding: Failed to capture the contextual similarity, as evidenced by the low cosine similarity.

Figure 2 – Cosine similarities of text representation from One-hot Encoding
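The one-hot comparison can be reproduced with a few lines of code. The sketch below is illustrative only: it assumes each claim cause is represented by summing the one-hot vectors of its words (a bag-of-words vector), which may differ from the exact encoding behind Figure 2, and the function names are hypothetical.

```python
import math


def bag_of_words_vector(text, vocabulary):
    """Sum of the one-hot vectors of the words in `text` (an assumed encoding)."""
    words = text.lower().split()
    return [float(words.count(term)) for term in vocabulary]


def cosine_similarity(u, v):
    """Dot product of u and v divided by the product of their lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


texts = ["Mammary gland tumor", "Breast cancer", "Depression", "Monday morning"]
vocabulary = sorted({word for text in texts for word in text.lower().split()})
vectors = {text: bag_of_words_vector(text, vocabulary) for text in texts}

similarity = cosine_similarity(
    vectors["Mammary gland tumor"], vectors["Breast cancer"]
)
print(similarity)  # 0.0, because the two phrases share no words
```

Because the two descriptions have no word in common, their vectors are orthogonal, even though a clinician would read them as closely related.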

Anomaly Detection Approaches

One key consideration in selecting a modelling approach is whether the claims data has been “labelled”. Claims data can be broadly classified into three categories according to the type of labelling.

- Labelled data

Each claim has been clearly labelled as an anomalous or a normal claim.

- Non-labelled data

No information on whether each claim is anomalous or normal.

- Partially labelled data

Only some claims have been labelled as anomalous or normal claims. For most of the claims, there is no information on whether the claim is anomalous.

In practice, fully labelled claims data is rare because the labels are often assigned by hand by claims investigators, which can be tedious and labor-intensive. Furthermore, the definition of an anomalous claim may not be straightforward in certain scenarios.

- Clear fraud

For clearly fraudulent claims investigated by claims investigators, it will be easy to label these claims as anomalous. The process of establishing that a claim is fraudulent can, however, be resource intensive. Consequently, it is hard to investigate every claim in the claims dataset and then fully label the entire dataset.

- Soft fraud

The definition of a soft fraudulent claim can be ambiguous and subjective. A common cold with 14 days of hospitalization could arguably be considered soft abuse. How about when the number of hospitalization days drops to 7? What about 5? The lack of a clear definition makes it challenging for us to hand-label the data as fraudulent. It is, however, this lack of a clear definition that necessitates the development of models to detect anomalies.

Consequently, it is more common to work with non-labelled data or partially labelled data. Nevertheless, approaches to the analysis for all three categories of claims datasets have been set out here.

Non-Labelled Data – Unsupervised Learning

Since we do not know which claims are anomalous, we cannot train a model to learn the features associated with anomalous claims. We have to rely on unsupervised approaches, which use machine learning algorithms to discover patterns in the data, so that we can investigate further whether the flagged claims are cases of waste, fraud, or abuse. Examples of unsupervised approaches include density-based and distance-based approaches that separate the anomalous claims from the normal claims.

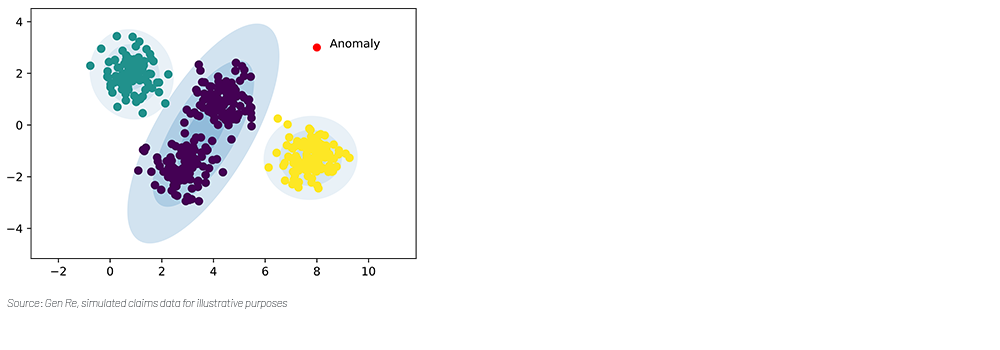

A density-based approach models the claims with a probabilistic model, such as a Gaussian mixture model. We can use such a model to assign density scores to claims. An anomalous claim would have a low probability of being a normal claim and would fall into a region of low density, as shown in Figure 3.

Figure 3 – Anomaly detection using Gaussian mixture model
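The scoring step of a density-based approach can be sketched as follows. This is a simplified illustration on a single feature (claim amount): the mixture weights, means, and standard deviations are hypothetical values assumed to have been estimated beforehand (for example, with the expectation-maximization algorithm), and the cut-off is arbitrary.

```python
import math

# Assumed, already-fitted mixture over claim amounts (hypothetical values):
# (weight, mean, standard deviation) for each Gaussian component.
components = [(0.7, 1000.0, 200.0), (0.3, 5000.0, 800.0)]


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and std at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def log_density(x, components):
    """Log of the mixture density: log(sum_k weight_k * N(x | mean_k, std_k))."""
    density = sum(w * gaussian_pdf(x, m, s) for w, m, s in components)
    return math.log(density) if density > 0.0 else float("-inf")


claim_amounts = [950.0, 1200.0, 4800.0, 5600.0, 12000.0]
scores = {amount: log_density(amount, components) for amount in claim_amounts}

# Hypothetical cut-off; in practice it would be chosen from the score distribution.
threshold = -15.0
flagged = [amount for amount, score in scores.items() if score < threshold]
print(flagged)  # [12000.0]
```

Claims near either component mean receive a high log-density, while the claim of 12,000 sits far from both components and falls below the cut-off.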

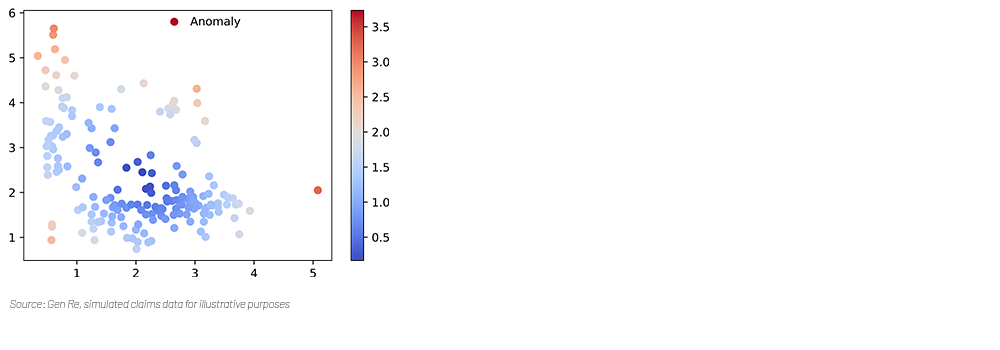

A distance-based approach considers the distances between claims, such as the Mahalanobis distance. The Mahalanobis distance takes into account the correlation among variables, which is a feature common in our claims data. The general idea is that an anomalous claim would be far away from normal claims. We can calculate the distances between such claims and use an appropriate algorithm to flag the anomalous claims. Figure 4 shows how an anomaly has been flagged using Mahalanobis distance.

Figure 4 – Anomaly detection using Mahalanobis distance
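A minimal sketch of the distance calculation is shown below for two features. The reference claims, the two new claims, and the function names are hypothetical; the mean and covariance are estimated from claims assumed to be normal, and the 2×2 covariance matrix is inverted in closed form to keep the example dependency-free.

```python
import math

# Hypothetical reference claims treated as normal:
# (hospital days, claim amount in thousands).
reference = [(1, 1.0), (2, 2.1), (3, 2.9), (4, 4.2), (5, 5.0), (6, 5.8)]


def mean_and_covariance(points):
    """Sample mean and 2x2 sample covariance (sxx, sxy, syy) of 2-D points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def mahalanobis(point, mean, cov):
    """sqrt(d^T S^-1 d), using the closed-form inverse of a 2x2 matrix."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    quad = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(quad)


mean, cov = mean_and_covariance(reference)
typical_claim = (3, 3.1)   # amount in line with the length of stay
unusual_claim = (2, 5.5)   # short stay but a high amount

d_typical = mahalanobis(typical_claim, mean, cov)
d_unusual = mahalanobis(unusual_claim, mean, cov)
print(round(d_typical, 2), round(d_unusual, 2))
```

The unusual claim is only moderately far from the mean in plain Euclidean terms, but it breaks the strong correlation between days and amount, so its Mahalanobis distance is far larger than that of the typical claim.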

Partially Labelled Data – Semi-Supervised Learning

Claims assessors may have seen a subset of the claims and have flagged some of these claims as anomalous. While the labelling is not comprehensive, we can nevertheless make use of such limited information using a semi-supervised approach. A semi-supervised approach combines both supervised and unsupervised learning.

One example of a semi-supervised approach is to use a label propagation algorithm [1] to create labels for non-labelled claims using the labelled claims. The underlying assumption is that claims with similar characteristics would have similar labels. Once all unlabelled claims have been labelled by the algorithm, we can train a supervised model on the “fully” labelled dataset and learn the characteristics of anomalous claims.
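The idea can be sketched as below. This is a simplified variant written for illustration, not a faithful reproduction of the cited algorithm: it uses a Gaussian-kernel similarity, row-normalized weights, and a fixed number of iterations, and the claims, labels, and `sigma` value are hypothetical.

```python
import math

# Hypothetical claims as (hospital days, amount in thousands).
claims = [
    (1, 1.0), (2, 1.5), (1, 2.0), (2, 2.5),          # short, low-cost stays
    (12, 20.0), (13, 22.0), (14, 21.0), (12, 23.0),  # long, high-cost stays
]
# Assessor labels: 0 = normal, 1 = anomalous, None = not yet reviewed.
labels = [0, None, None, None, 1, None, None, None]


def clamp(label):
    """Belief vector [P(normal), P(anomalous)] for a reviewed claim."""
    return [1.0 - label, float(label)]


def propagate_labels(points, labels, sigma=2.0, iterations=50):
    n = len(points)
    # Gaussian-kernel similarity: closer claims get larger weights.
    weights = [
        [math.exp(-((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2) / sigma ** 2)
         for q in points]
        for p in points
    ]
    # Row-normalise so each claim's weights over all claims sum to 1.
    transition = [[w / sum(row) for w in row] for row in weights]
    # Unreviewed claims start undecided; reviewed claims start at their label.
    beliefs = [[0.5, 0.5] if lab is None else clamp(lab) for lab in labels]
    for _ in range(iterations):
        spread = [
            [sum(transition[i][j] * beliefs[j][k] for j in range(n))
             for k in range(2)]
            for i in range(n)
        ]
        # Keep the assessors' labels fixed after every propagation step.
        beliefs = [
            spread[i] if labels[i] is None else clamp(labels[i])
            for i in range(n)
        ]
    return [0 if normal >= anomalous else 1 for normal, anomalous in beliefs]


propagated = propagate_labels(claims, labels)
print(propagated)  # [0, 0, 0, 0, 1, 1, 1, 1]
```

Each unreviewed claim ends up with the label of the reviewed claim it most resembles. In practice the features would be scaled first, so that no single feature dominates the distance.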

Partially Labelled Data – Self-Supervised Learning

Another way to use partially labelled data is a self-supervised approach. Self-supervised learning is a machine learning paradigm in which the model is not trained on labels as prediction targets, which is the key difference from semi-supervised learning. Instead, label-based information is used to extract supervisory signals from the data, and these signals are then used in training.

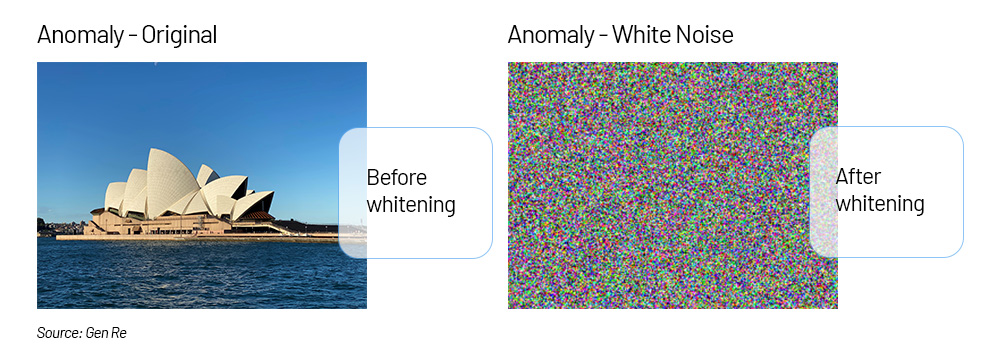

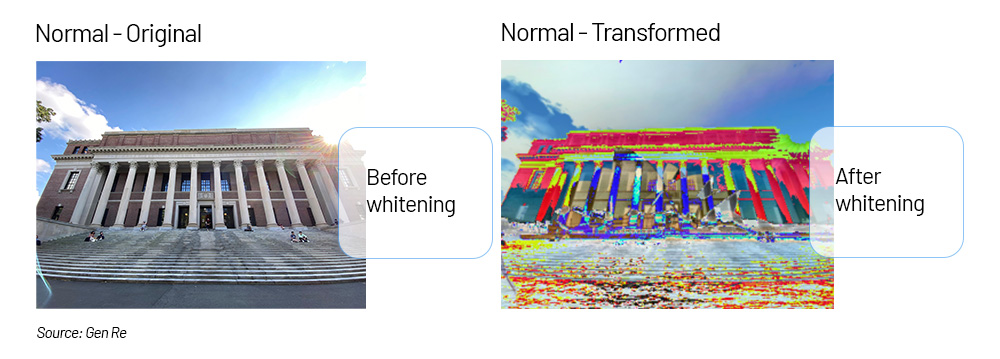

One example of a self-supervised approach is to use statistical whitening [2] to transform the features of our claims data for modelling. The pioneering work on such transformations was in natural language processing tasks, but similar ideas can be applied to claims anomaly detection. Using information from our labelled data, we can whiten the features of the claims. The motivation for whitening is to normalize the anomalous claims into white noise while pushing the normal claims away from white noise, increasing the contrast between the two for easier separation.

Using images to illustrate the idea:

- We labelled the images of the Sydney Opera House as anomalies. After applying statistical whitening using characteristics of the Sydney Opera House, the anomalous image has been transformed into white noise, as represented in Figure 5.

Figure 5 – Whitening transformation on an anomalous image

- Using characteristics of the Sydney Opera House, we apply similar statistical whitening to an image, which we define as normal. As this image is not like the Sydney Opera House (anomalous), the image after whitening becomes very different to white noise, as shown in Figure 6. The task of anomaly detection becomes an easier task of differentiating white noise from other non-white noise images.

Figure 6 – Whitening transformation on a normal image
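The same idea can be sketched on tabular claims data. The example below is one possible reading of the approach described above, not the method of the cited paper: it estimates the mean and covariance from hypothetical labelled anomalous claims, whitens with a Cholesky factor of that covariance, and compares how far each group lands from the origin.

```python
import math

# Hypothetical claims: (hospital days, amount in thousands).
anomalous = [(10, 30.0), (12, 33.0), (14, 41.0), (11, 34.0), (13, 37.0)]
normal = [(2, 3.0), (3, 4.5), (1, 2.0)]


def fit_whitener(points):
    """Return a function mapping x to L^-1 (x - mean), where L L^T is the
    sample covariance of `points` (Cholesky factor of a 2x2 matrix)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    l11 = math.sqrt(sxx)
    l21 = sxy / l11
    l22 = math.sqrt(syy - l21 * l21)

    def whiten(point):
        dx, dy = point[0] - mx, point[1] - my
        z1 = dx / l11                # forward substitution, first row
        z2 = (dy - l21 * z1) / l22   # forward substitution, second row
        return (z1, z2)

    return whiten


# Fit the transformation on the labelled anomalous claims only.
whiten = fit_whitener(anomalous)
anomalous_norms = [math.hypot(*whiten(p)) for p in anomalous]
normal_norms = [math.hypot(*whiten(p)) for p in normal]
print([round(v, 2) for v in anomalous_norms])
print([round(v, 2) for v in normal_norms])
```

After whitening, the anomalous claims have zero mean and unit covariance, so they cluster near the origin like white noise, while the normal claims land much farther away and become easier to separate.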

Labelled Data – Supervised Learning

A supervised learning model uses the labels of the data in training. With labelled claims data, we can train a supervised machine learning model to learn the characteristics of a fraudulent claim. The model will then be able to pick up fraudulent claims with similar characteristics. One example of a supervised model is a neural network. A neural network is loosely inspired by the human brain and offers the flexibility of different architectures to model complex relationships.

The success of detecting fraudulent claims rests on the assumption that the labelled data is fully representative of fraudulent claims. In some cases, the labelled data is insufficient to fully characterize all notions of a fraudulent claim. This could be due to a distributional shift in the characteristics of fraudulent claims that causes previously labelled claims to become outdated. Fraudsters can adapt and change the way fraudulent claims are made. Extra care should be taken in such cases.

Performance Evaluation

Performance evaluation is more straightforward when we have labelled data and are using supervised approaches. Traditional metrics based on the Receiver Operating Characteristic (ROC) curve, such as the area under the curve (AUC), can be used for assessment.
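As an illustration, the area under the ROC curve can be computed directly from labels and model scores. The sketch below uses the pairwise interpretation of the metric; the labels, scores, and the function name `roc_auc` are hypothetical.

```python
def roc_auc(labels, scores):
    """Probability that a random anomalous claim outscores a random normal
    claim, counting ties as one half (equal to the area under the ROC curve)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one claim of each class")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = anomalous) and model scores for six claims.
labels = [0, 0, 1, 0, 1, 0]
scores = [0.10, 0.40, 0.35, 0.20, 0.90, 0.05]
auc = roc_auc(labels, scores)
print(auc)  # 0.875
```

A value of 1.0 would mean every anomalous claim is scored above every normal claim, and 0.5 corresponds to random ranking.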

In the absence of labelled data, we may have to rely on external validation. For example, a claims assessor can investigate a claim flagged by the unsupervised model and validate whether the claim is indeed an anomaly. Another validation approach is to introduce synthetic claim anomalies and check whether the unsupervised model can flag them. One way to create synthetic claim anomalies is to ask domain experts to come up with claims that they would deem to be anomalous.
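The synthetic-anomaly check can be sketched as follows. The detector here is a deliberately simple stand-in (a z-score rule on claim amount) rather than any particular unsupervised model, and all amounts and the cut-off are hypothetical.

```python
import statistics

# Hypothetical historical claim amounts, assumed to be mostly normal.
historical = [900, 1100, 1000, 950, 1050, 1200, 800, 1000, 1150, 850]
# Synthetic anomalies proposed by domain experts (hypothetical values).
synthetic = [5000, 3000, 1250]

mean = statistics.mean(historical)
std = statistics.stdev(historical)


def is_flagged(amount, cutoff=3.0):
    """Stand-in detector: flag amounts more than `cutoff` standard
    deviations away from the historical mean."""
    return abs(amount - mean) / std > cutoff


detected = [amount for amount in synthetic if is_flagged(amount)]
detection_rate = len(detected) / len(synthetic)
print(detected, round(detection_rate, 2))  # [5000, 3000] 0.67
```

The undetected synthetic claim is as informative as the detected ones: it shows the kind of subtle anomaly the model misses and where further features or tuning may be needed.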

In general, a transparent and explainable model is important, enabling a clear understanding of why a claim has been flagged as anomalous. Validation from domain experts can then confirm the validity of the reasons provided. It is important to note that the model’s flagging of a claim as anomalous is often insufficient evidence to prove fraud. Typically, assessors must conduct further investigations to make the final determination. Through these investigations, the performance of the model can be further assessed, and necessary updates made. Continuous validation is crucial to ensure accuracy.

Conclusion

Insurance companies are in the business of paying legitimate claims, and various forms of claims anomaly detection have always been an integral part of the business. Experienced claims professionals have played a key role in such review processes, and a tool that complements our existing review processes can bolster our lines of defense. The ability to leverage technology to harness the value of data for claims anomaly detection gives insurance companies a competitive advantage or, at the very least, prevents them from falling behind in the race to adopt technology in insurance.

Endnotes

1. X. Zhu, Z. Ghahramani, “Learning from labeled and unlabeled data with label propagation”, CMU‑CALD‑02‑107, Carnegie Mellon University, 2002, https://api.semanticscholar.org/CorpusID:15008961. Accessed on 16 April 2024.

2. A. Ermolov, A. Siarohin, E. Sangineto, N. Sebe, “Whitening for Self-Supervised Representation Learning”, https://doi.org/10.48550/arXiv.2007.06346. Accessed on 16 April 2024.